When would you want to use it?

Great Expectations is an open-source library that helps keep the quality of your data in check through data testing, documentation, and profiling, and that improves communication and observability. Great Expectations works with tabular data in a variety of formats and data sources, of which ZenML currently supports only `pandas.DataFrame` as part of its pipelines.

You should use the Great Expectations Data Validator when you need the following data validation features that are possible with Great Expectations:

- Data Profiling: generates a set of validation rules (Expectations) automatically by inferring them from the properties of an input dataset.

- Data Quality: runs a set of predefined or inferred validation rules (Expectations) against an in-memory dataset.

- Data Docs: generates and maintains human-readable documentation of all your data validation rules, data quality checks and their results.
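To make the Data Docs idea concrete, here is a toy Python sketch that turns a list of validation results into a small HTML page. The function name `render_validation_doc` and the result dictionary layout (`expectation`, `column`, `success` keys) are hypothetical and are not part of the Great Expectations API; real Data Docs sites are generated by Great Expectations itself.

```python
from html import escape
from typing import Dict, List, Union


def render_validation_doc(suite_name: str, results: List[Dict[str, Union[str, bool]]]) -> str:
    """Hypothetical helper: render toy validation results as a small HTML page."""
    header = "<tr><th>Expectation</th><th>Column</th><th>Status</th></tr>"
    rows = []
    for r in results:
        status = "PASS" if r["success"] else "FAIL"
        # Escape user-provided names so they cannot break the HTML markup.
        rows.append(
            f"<tr><td>{escape(str(r['expectation']))}</td>"
            f"<td>{escape(str(r['column']))}</td><td>{status}</td></tr>"
        )
    return f"<h1>{escape(suite_name)}</h1>\n<table>{header}{''.join(rows)}</table>"


page = render_validation_doc("orders<suite>", [
    {"expectation": "not_null", "column": "age", "success": True},
    {"expectation": "between", "column": "age", "success": False},
])
```

The point is only that the same rules and results that drive validation can be rendered for humans; the layout here is arbitrary.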

How do you deploy it?

The Great Expectations Data Validator flavor is included in the Great Expectations ZenML integration. You need to install the integration on your local machine (e.g. with `zenml integration install great_expectations -y`) to be able to register a Great Expectations Data Validator and add it to your stack.

You can then deploy the Data Validator in one of the following ways:

- let ZenML initialize and manage the Great Expectations configuration. The Artifact Store will serve as a storage backend for all the information that Great Expectations needs to persist (e.g. Expectation Suites, Validation Results). However, you will not be able to set up new Data Sources, Metadata Stores or Data Docs sites. Any changes you try to make to the configuration through code will not be persisted and will be lost when your pipeline completes or your local process exits.

- use ZenML with your existing Great Expectations configuration. You can tell ZenML to replace your existing Metadata Stores with the active ZenML Artifact Store by setting the `configure_zenml_stores` attribute in the Data Validator. The downside is that you will only be able to run pipelines locally with this setup, given that the Great Expectations configuration is a file on your local machine.

- migrate your existing Great Expectations configuration to ZenML. This is a compromise between the first two options that allows you to continue to use your existing Data Sources, Metadata Stores and Data Docs sites even when running pipelines remotely.
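As a rough mental model of the first option, the sketch below persists Expectation Suites and Validation Results as JSON documents under a shared root directory, loosely analogous to the Artifact Store acting as the storage backend so that data outlives a single process. `DirectoryBackedStore` and its methods are hypothetical; this is not how ZenML or Great Expectations implement their stores.

```python
import json
import tempfile
from pathlib import Path


class DirectoryBackedStore:
    """Hypothetical key/value store that persists JSON documents on disk.

    Toy analogue of a Great Expectations store backed by the ZenML
    Artifact Store; not the real implementation.
    """

    def __init__(self, root: str, prefix: str) -> None:
        # Each store (e.g. expectations, validations) gets its own sub-folder.
        self.base = Path(root) / prefix
        self.base.mkdir(parents=True, exist_ok=True)

    def set(self, key: str, value: dict) -> None:
        (self.base / f"{key}.json").write_text(json.dumps(value, sort_keys=True))

    def get(self, key: str) -> dict:
        return json.loads((self.base / f"{key}.json").read_text())

    def list_keys(self) -> list:
        return sorted(p.stem for p in self.base.glob("*.json"))


# Usage: two stores share one root, and a fresh handle sees persisted data.
with tempfile.TemporaryDirectory() as root:
    suites = DirectoryBackedStore(root, "expectations")
    validations = DirectoryBackedStore(root, "validations")
    suites.set("my_suite", {"expectations": [{"type": "not_null", "column": "age"}]})
    validations.set("run_1", {"success": True})
    reloaded = DirectoryBackedStore(root, "expectations").get("my_suite")
    suite_keys = suites.list_keys()
    validation_keys = validations.list_keys()
```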

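To use your existing Great Expectations configuration, set the `context_root_dir` attribute of the Data Validator to the root directory where the `great_expectations.yaml` configuration file is located.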

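To migrate your existing configuration to ZenML, copy it into the Data Validator's `context_config` attribute using the `@` operator, which loads the contents of a file (here, your `great_expectations.yaml`) as the attribute value.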
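The `@` convention can be sketched as a value resolver: a value starting with `@` is treated as a file path and replaced by that file's contents, while anything else is passed through unchanged. `resolve_attribute_value` is a hypothetical helper written for illustration, under the assumption that the operator simply substitutes file contents; it is not ZenML's implementation.

```python
import tempfile
from pathlib import Path


def resolve_attribute_value(value: str) -> str:
    """Hypothetical helper: expand '@<path>' into the contents of <path>.

    Any value not starting with '@' is returned unchanged.
    """
    if value.startswith("@"):
        return Path(value[1:]).expanduser().read_text()
    return value


# Usage with a throwaway configuration file.
with tempfile.TemporaryDirectory() as tmp:
    config_path = Path(tmp) / "great_expectations.yaml"
    config_path.write_text("config_version: 3.0\n")
    from_file = resolve_attribute_value(f"@{config_path}")
    literal = resolve_attribute_value("/path/to/my/great_expectations")
```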

Advanced Configuration

The Great Expectations Data Validator has a few advanced configuration attributes that might be useful for your particular use-case:

- `configure_zenml_stores`: if set, ZenML will automatically update the Great Expectations configuration to include Metadata Stores that use the Artifact Store as a backend. If neither `context_root_dir` nor `context_config` is set, this is the default behavior. You can set this flag to use the ZenML Artifact Store as a backend for Great Expectations with any of the deployment methods described above. Note that ZenML will not copy the information in your existing Great Expectations stores (e.g. Expectation Suites, Validation Results) into the ZenML Artifact Store. This is something that you will have to do yourself.

- `configure_local_docs`: set this flag to configure a local Data Docs site where Great Expectations docs are generated and can be visualized locally. Use this in case you don’t already have a local Data Docs site in your existing Great Expectations configuration.
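The defaulting rule for `configure_zenml_stores` fits in a few lines. The `GEValidatorConfig` dataclass below is a hypothetical model of the attributes named above, not ZenML's actual Data Validator class; it assumes that leaving `configure_zenml_stores` unset (`None`) means "apply the default".

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class GEValidatorConfig:
    """Hypothetical model of the Data Validator attributes described above."""

    context_root_dir: Optional[str] = None
    context_config: Optional[dict] = None
    # None means "not set by the user", so the default rule applies.
    configure_zenml_stores: Optional[bool] = None

    def uses_zenml_stores(self) -> bool:
        if self.configure_zenml_stores is not None:
            # An explicit setting always wins.
            return self.configure_zenml_stores
        # Default: on only when ZenML owns the whole configuration.
        return self.context_root_dir is None and self.context_config is None
```

For example, `GEValidatorConfig(context_root_dir="/ge", configure_zenml_stores=True)` models the second deployment option with the Artifact Store swapped in as the backend.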

How do you use it?

The core Great Expectations concepts that you should be aware of when using it within ZenML pipelines are Expectations / Expectation Suites, Validations and Data Docs. ZenML wraps the Great Expectations functionality in the form of two standard steps:

- a Great Expectations data profiler that can be used to automatically generate Expectation Suites from an input `pandas.DataFrame` dataset

- a Great Expectations data validator that uses an existing Expectation Suite to validate an input `pandas.DataFrame` dataset

The Great Expectations data profiler step

The standard Great Expectations data profiler step builds an Expectation Suite automatically by running a `UserConfigurableProfiler` on an input `pandas.DataFrame` dataset. The generated Expectation Suite is saved in the Great Expectations Expectation Store, but also returned as an `ExpectationSuite` artifact that is versioned and saved in the ZenML Artifact Store. The step automatically rebuilds the Data Docs.
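To illustrate what profiling means, the following sketch infers a few simple expectations (no nulls, a numeric min/max range, an allowed set of values) from a column-oriented dataset. It is a toy stand-in in plain Python with hypothetical names (`profile_columns` and the expectation dictionaries); it does not reproduce the rules that `UserConfigurableProfiler` actually applies.

```python
from typing import Any, Dict, List


def profile_columns(data: Dict[str, List[Any]]) -> List[Dict[str, Any]]:
    """Toy profiler: infer simple expectations from column-oriented data."""
    expectations: List[Dict[str, Any]] = []
    for column, values in data.items():
        present = [v for v in values if v is not None]
        # Expect no nulls only if the sample had none.
        if len(present) == len(values):
            expectations.append({"type": "not_null", "column": column})
        if not present:
            continue
        is_numeric = all(
            isinstance(v, (int, float)) and not isinstance(v, bool) for v in present
        )
        if is_numeric:
            # Numeric columns: expect values within the observed range.
            expectations.append(
                {"type": "between", "column": column,
                 "min": min(present), "max": max(present)}
            )
        else:
            # Other columns: expect only values that were observed.
            expectations.append(
                {"type": "in_set", "column": column,
                 "values": sorted(set(present), key=str)}
            )
    return expectations


suite = profile_columns({
    "age": [31, 45, 27],
    "plan": ["free", "pro", "free"],
    "email": ["a@example.com", None, "c@example.com"],
})
```

Inferred rules only reflect the sample they were built from, which is why a generated suite is usually reviewed before it is used to gate data.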

At a minimum, the step configuration expects a name to be used for the Expectation Suite. The step can then be inserted into your pipeline, where it takes in a `pandas.DataFrame` dataset and returns a Great Expectations `ExpectationSuite` object.

The Great Expectations data validator step

The standard Great Expectations data validator step validates an input `pandas.DataFrame` dataset by running an existing Expectation Suite on it. The validation results are saved in the Great Expectations Validation Store, but also returned as a `CheckpointResult` artifact that is versioned and saved in the ZenML Artifact Store. The step automatically rebuilds the Data Docs.
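Conversely, validation evaluates each expectation in a suite against a new batch of data and reports per-expectation and overall success. This plain-Python sketch uses hypothetical names (`evaluate_expectation`, `validate`) and a made-up dictionary format for expectations; it only mirrors the idea behind a `CheckpointResult`, not its structure.

```python
from typing import Any, Dict, List


def evaluate_expectation(expectation: Dict[str, Any], values: List[Any]) -> bool:
    """Check one toy expectation against the values of a single column."""
    kind = expectation["type"]
    if kind == "not_null":
        return all(v is not None for v in values)
    present = [v for v in values if v is not None]  # nulls are not range/set violations
    if kind == "between":
        return all(expectation["min"] <= v <= expectation["max"] for v in present)
    if kind == "in_set":
        return all(v in expectation["values"] for v in present)
    raise ValueError(f"unknown expectation type: {kind}")


def validate(data: Dict[str, List[Any]], suite: List[Dict[str, Any]]) -> Dict[str, Any]:
    """Run every expectation and report per-expectation and overall success."""
    results = [
        {**exp, "success": evaluate_expectation(exp, data[exp["column"]])}
        for exp in suite
    ]
    return {"success": all(r["success"] for r in results), "results": results}


suite = [
    {"type": "not_null", "column": "age"},
    {"type": "between", "column": "age", "min": 18, "max": 65},
    {"type": "in_set", "column": "plan", "values": ["free", "pro"]},
]
report = validate({"age": [30, 72], "plan": ["free", "pro"]}, suite)
```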

At a minimum, the step configuration expects the name of the Expectation Suite to be used for the validation. The step can then be inserted into your pipeline, where it takes in a `pandas.DataFrame` dataset and a boolean condition and returns a Great Expectations `CheckpointResult` object. The boolean condition is only used as a means of ordering steps in a pipeline (e.g. if you must force the validator to run only after the data profiling step has generated an Expectation Suite).
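The ordering trick works because a step can only run once all of its inputs exist. The toy runner below, built on the standard library's `graphlib.TopologicalSorter` (Python 3.9+), derives execution order purely from which outputs are wired into which parameters, so wiring the profiler's boolean output into the validator's `condition` parameter is enough to make the validator run second. The runner and step functions are hypothetical and are not ZenML's orchestration code.

```python
from graphlib import TopologicalSorter
from typing import Any, Callable, Dict, List, Tuple

# Each step: (function, {parameter_name: name_of_upstream_step}).
Steps = Dict[str, Tuple[Callable[..., Any], Dict[str, str]]]


def run_pipeline(steps: Steps) -> Tuple[List[str], Dict[str, Any]]:
    """Run steps in an order derived only from how outputs are wired to inputs."""
    graph = {name: set(inputs.values()) for name, (_, inputs) in steps.items()}
    order = list(TopologicalSorter(graph).static_order())
    outputs: Dict[str, Any] = {}
    for name in order:
        func, inputs = steps[name]
        outputs[name] = func(**{param: outputs[src] for param, src in inputs.items()})
    return order, outputs


calls: List[str] = []


def load_data() -> Dict[str, List[int]]:
    return {"age": [30, 41]}


def profile(df: Dict[str, List[int]]) -> bool:
    calls.append("profile")
    return True  # stands in for "the Expectation Suite now exists"


def validate(df: Dict[str, List[int]], condition: bool) -> bool:
    # `condition` is never read: it only creates the profile -> validate edge.
    calls.append("validate")
    return all(18 <= age <= 65 for age in df["age"])


# Declared in reverse order on purpose; the wiring alone decides execution order.
order, outputs = run_pipeline({
    "validate": (validate, {"df": "load_data", "condition": "profile"}),
    "profile": (profile, {"df": "load_data"}),
    "load_data": (load_data, {}),
})
```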

Call Great Expectations directly

You can use the Great Expectations library directly in your custom pipeline steps, while leveraging ZenML’s capability of serializing, versioning and storing the `ExpectationSuite` and `CheckpointResult` objects in its Artifact Store. To use the Great Expectations configuration managed by ZenML while interacting with the Great Expectations library directly, you need to use the Data Context managed by ZenML instead of the default one provided by Great Expectations.

The Great Expectations ZenML Visualizer

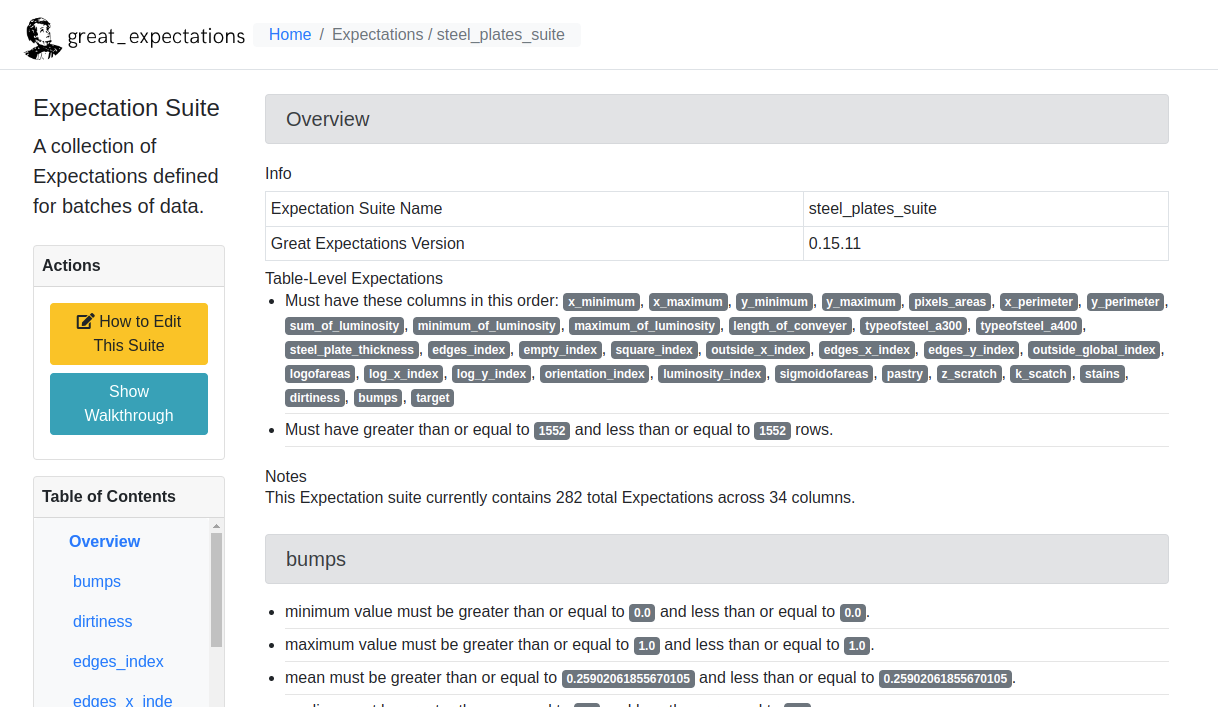

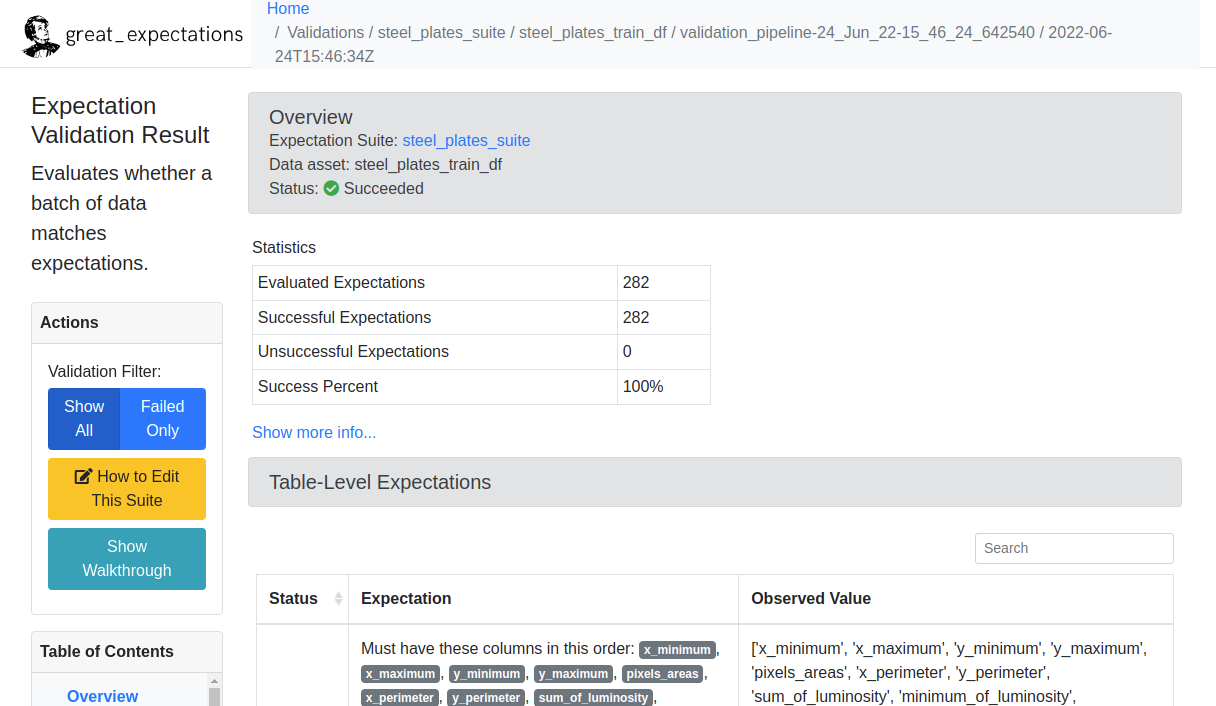

In the post-execution workflow, you can view the Expectation Suites and Validation Results generated and returned by your pipeline steps in the Great Expectations Data Docs by means of the ZenML Great Expectations Visualizer, e.g.: Expectations Suite Visualization

Expectations Suite Visualization

Validation Results Visualization

Validation Results Visualization